BST 3linden Minutes en

Contents

- 1 Build Service Team – Offsite Meeting Nov 24/25, 2005

- 2 openSUSE build service goal definition (guided by Roland Haidl)

- 3 Requirements and use-cases (from reality and product management ;) , by adrian)

- 4 Architecture Overview (cschum)

- 5 Components, Source Storage API, External API, Tool Design

- 6 Next Milestones

- 7 Discussion of Packager

- 8 ToDo-List

Build Service Team – Offsite Meeting Nov 24/25, 2005

The following document covers a two-day offsite meeting of the initial openSUSE build service team. It summarizes expectations, concerns, ideas and opportunities around the build service part of the openSUSE project. It is a rough collection of bits and pieces, neither complete nor final.

Please note that these meeting minutes do not reflect any official Novell statement or any binding roadmap or schedule. They are open for discussion and improvement and reflect solely the ideas and opinions of the individual participants. (sp)

openSUSE build service goal definition (guided by Roland Haidl)

Finding a name

Brainstorming for an external Name:

- Autobuild (would destroy the autoBuild-myth, if it goes wrong.)

- opensuse distribution service (Service Zentrale)

- "Build Service"

- BEST: Build Enablement Service Team

- IPE: Integrated Packaging Environment

- OSDF: Open SUSE Distribution Framework

- Perhaps even 'Build Server Environment' (BSE) (using cattle breeds as host names...)

Internal name:

- Build Service Team: BST

So, it is not just 'build server', but 'build service'. That is more than a simple server.

Risks

(number of votes in parentheses)

unclear goals (6)

- need technical specifications (1)

competing teams (5).

- internal competition: Rudi's team and Klaas' team think and work differently. -> Try teamwork?

- external competition (Fedora, Debian -> good motivation) (2)

low acceptance

- within SuSE/Novell (4). Management / Colleagues (US)

- among the community (3) -> provide easy access, transparent processes, involve upstream

lack of communication (4)

- internal

- external -> Transparency & Involvement [but this is off-topic for a technical meeting like this]

Resources (3)

- enough available Hardware (3)

- Unstable Xen (3)

- People

- Network -> this (all) depends on acceptance (3)

- Novell's Commitment (5)

Chances

Proof that Novell really goes LINUX (5):

- send a positive signal to the community.

More transparency (3):

- open up our build technology. Processes.

- perhaps even source code of the build system

- better community involvement

Larger user base and more developers (4).

More software packages:

- who takes control?

- different views on legal issues.

- 3rd party involvement (e.g. RealNetworks could build RealPlayer using [our / their own] build server).

More fun at Novell (3):

- good motivation

Obsolete old systems:

- Obsoleting PDB could be a big improvement.

- Autobuild also needs a renewal

Usability (3):

- We can provide different Frontends, e.g. for beginners and experts

The SUSE Platform:

- could profit through improved acceptance and stability.

- improved interaction with the community (3)

openSUSE build service is not...

- a huge software-repository like sourceforge. We will link to/from and cooperate with existing repositories, and keep only a source repository to recompile after changed dependencies.

- a Developers-CVS. We won't have good support for frequent edit-compile-test cycles.

- a place for swapping warez. We don't support upload of binaries, only sources. (Binary RPM upload is required for bootstrapping a new distribution, so this is a feature for 'later'.)

Requirements and use-cases (from reality and product management ;) , by adrian)

Use Cases

(sorted by availability)

- Build a single new package

- only a leaf-package that does not affect dependencies.

- only a simple package, one spec-file

- but for multiple distributions and architectures

- Modification of an existing package.

- Testing a bugfix

- contributing a patch.

- A partner builds closed source packages.

- on a dedicated system?

Requirements

(as extracted from the use cases)

- We need to support external build-hosts.

- Sources can be hosted externally.

- Closed source service.

- communication mechanisms (later). We need to support the existence of multiple instances of our 'build server' (naming, user IDs, etc.).

- Projects. A project is a logical collection of packages (like e.g. KDE5) or just a container for a single package.

Support home-pages (Wiki, bugzilla, tracking, etc) per package.

Supported Usergroups

(as extracted from the use cases)

- Open-Source developer.

- Download-customer (end-user).

- Packagers (e.g. packman.links2linux.org)

- Closed-Source customer (3rd-party company)

- Novell inhouse developers are not special.

They must categorize as one of the above.

External Communication

We need to check our results for inconsistencies, goofs, and omissions, before going public.

- In Q1-2006 we will have a Build-Server, allowing add-on packages from external sources.

- Further announcements later.

Can we define a more detailed time-line?

Architecture Overview (cschum)

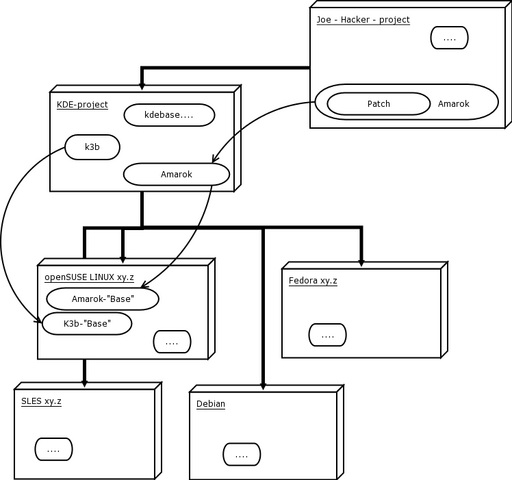

The software architecture allows for multiple Base-Operating Systems (SLES, openSUSE, Fedora, Debian are shown above). Each Base-OS may support one or more architectures (Hardware Platforms).

Users create a project-space for building their software. A project-space can be 'on top of' one or several Base-OSes. A Base-OS is a fall-back for packages that are not contained in the project-space. A typical project-space is e.g. kde5, containing a future release of the K Desktop Environment (shown as KDE-Project above). Such a project can build its contents against multiple Base Operating Systems, resulting in multiple sets of binary RPMs.

When looking up packages, we distinguish whether a package in the project space is a new package or a replacement for a package in the Base Operating System (kde5 may or may not be part of the Base OS). A package in the project space can also conflict with packages in the Base OS, e.g. kde4 is present, although the project wants to build kde5.

Once kde5 was successfully built, other packages can be tested on top of it. In the picture above, the kde-project took the standard sources of k3b (by referencing the sources found in a Base OS) and can now build k3b using the new kde5 libraries.

As an additional illustration, let us assume Mr. Joe Hacker created a feature-patch for amarok. He creates his own project-space for the patch. He does not want to check which Base-OS may be a suitable build environment for his patch, so he looks for a similar project. He chooses to apply his patch against the kde5 project (precisely: he'll build an amarok using sources as found in the kde5 project (or in the Base OSes) and his patch). This means that his build targets default to all the targets supported by kde5.

A build target is defined by a layered path of projects and a Base OS, plus a hardware architecture. This layered path will be called a platform.
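The layering described above can be sketched in a few lines of Python. This is only an illustration of the fall-back lookup. The class names `Layer` and `Platform`, the `lookup` method and the string source references are assumptions made for this sketch, not part of any agreed design.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Layer:
    """A project-space or a Base OS, holding packages by name (hypothetical model)."""
    name: str
    packages: Dict[str, str] = field(default_factory=dict)  # package name -> source reference


@dataclass
class Platform:
    """A layered path (topmost project first, Base OS last) plus a hardware architecture."""
    layers: List[Layer]
    arch: str

    def lookup(self, package: str) -> Optional[Tuple[str, str]]:
        """Return (layer name, source reference) from the first layer that has the package."""
        for layer in self.layers:
            if package in layer.packages:
                return layer.name, layer.packages[package]
        return None  # not found in any project or in the Base OS


# Joe Hacker's project sits on top of kde5, which sits on top of a Base OS.
base_os = Layer("openSUSE", {"k3b": "k3b-src", "kdelibs": "kde4-src"})
kde5 = Layer("kde5", {"kdelibs": "kde5-src"})
joe = Layer("home-joe", {"amarok": "amarok-src+patch"})
platform = Platform([joe, kde5, base_os], "i586")
```

With this model, `platform.lookup("kdelibs")` finds the kde5 replacement before the Base OS copy, while `platform.lookup("k3b")` falls through to the Base OS.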

Rules and Remarks

- One Base OS is the 'default Base OS', others (e.g. Fedora) are available as alternatives to the default.

- There will be far too many possible paths through projects and Base OSes. Build-server-administrators may be needed to keep the number of platforms low. E.g. by not allowing old SUSE7.x Base OS for most projects.

- For every once-released binary RPM, our source repository will be able to provide the sources, so that code can be reviewed and patches applied if e.g. needed for security-screening. This also means, we need to keep track of the exact project layering that was used, and need to track all the dependencies that were used.

- Legal issues. openSUSE build services will act as an ISP. We won't do e.g. license reviews upon each upload. We'll review (and possibly ban) problematic code, when it is pointed out.

- How will binary RPMs (used as dependencies) be added to the repository? A direct upload won't be allowed, everything must be built from source. A binary wrapped into a tarball and a spec-file may look like source, but still violates our rules.

Some packages need binaries of themselves before they can be built (Java, Gcc, Haskell). This calls for a build-server-admin. A new project may be based on a proprietary package (e.g. RealPlayer).

- A Submit Request (or proposal): Once he has demonstrated that his patch works as desired on the target platforms, Mr. Joe Hacker may want to notify the admins of project-kde5. The project admins may then consider adopting the patch.

- We don't build Binary-RPMs to run on multiple distributions (not even where possible). We build many Binary-RPMs instead, one per architecture and platform.

- Access rights: As every source and patch is contained in a well defined project space, we don't need to have contracts signed or any other formal authorisation. Everybody is allowed to create and maintain their own projects. We will only do user authentication against projects.

Projects may want to merge. We need mechanisms to detect related (or even identical) projects. What shall happen to depending projects when a merge changes the platform structure? Maybe have a state 'frozen'?

- How to define Platforms for a project? Perhaps by search paths for RPM.

- Is there a tool to find a matching Platform for a user's machine?

- To assist managing the system, we establish disk-quotas, user-rating and voting mechanisms. The size of the user base is also a good metric for evaluating a project.

- Scheduler could use such metrics for prioritizing the platforms.

- trax was proposed as a tracking system. Nevertheless we need a good naming convention for layered projects, so that bug reports can identify all the needed details of the platform. For using bugzilla.novell.com, it was proposed to assign own categories to a project/platform if a sufficient user base and/or a sufficient package count was established.

- To assist bug reports, Binary-RPMs need to contain enough information to recover their complete build environment and their source.

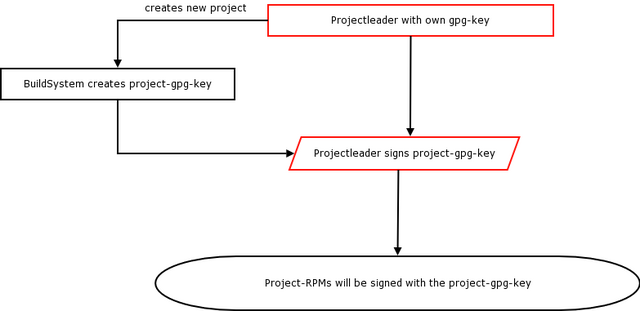

- A way for signing RPMs was discussed. The first idea was that every project should be able to sign their RPMs. We cannot request that project leaders upload their private GPG-key into the build-server. We will have the build-server automatically create a Project-GPG-Key. A project leader can then sign the Project-GPG-Key. Layered projects pose a problem, as an RPM-package can only contain one signature. Also, the benefits of signing by-project were not found to be clear. A sufficient alternative may be to sign RPMs only by the build server.

Components, Source Storage API, External API, Tool Design

(architecture details, mls)

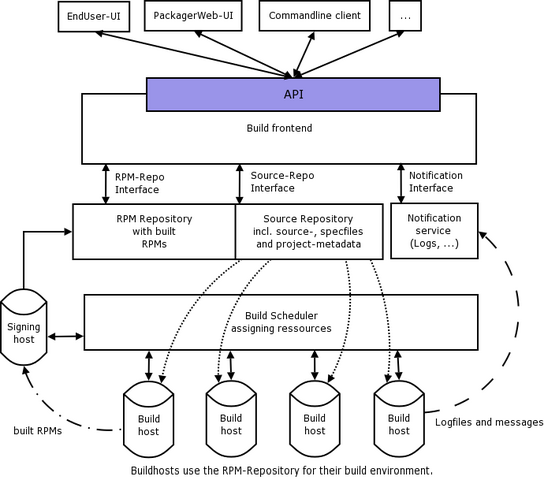

Web-based user interfaces and a command-line interface shall be available. Both the Web UI and the CLI client use the same Application Programming Interface. This API is provided by a build-frontend machine. It handles uploads and downloads and manages all meta-data. After uploading to the build frontend machine, a package is checked into the source repository. The build scheduler takes code from the source repository and dispatches build jobs to several build hosts. The build hosts return RPMs, which are stored in the RPM repository, to be downloaded via the build frontend API. The build hosts also return log files and events, which are passed to a notification service, also to be monitored via the build frontend API.

Trust levels and signed RPMs

The significance of a signed RPM was discussed. We could not decide if a GPG-signature shall/can represent a trust level. A project's trust level depends on the entire chain of project admins, who accepted contributions or otherwise influenced the packages. The weakest link (least trusted person) defines the trust level of the entire project. Key signing works differently. Trust is already established if one of a group signs a key. We obviously need different semantics here.
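The difference between the two semantics can be made concrete with a tiny sketch. It assumes, purely for illustration, that trust is expressed as an integer per person (higher means more trusted). No such scale was agreed in the meeting, and the function names are hypothetical.

```python
from typing import Iterable


def project_trust(admin_levels: Iterable[int]) -> int:
    """Weakest-link semantics: the least trusted person in the chain defines the level."""
    return min(admin_levels)


def key_trust(signer_levels: Iterable[int]) -> int:
    """Key-signing semantics: one sufficiently trusted signer is enough."""
    return max(signer_levels)
```

For the same group of people the two functions generally disagree, which is why a plain GPG signature does not map directly onto a project trust level.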

Open issues:

- Is a GPG-Key really a trust level?

- Who shall have a GPG-Key? People (admins, contributors, ...), projects, build-hosts, the entire build service?

Elements of the build-frontend

- Staging. Files are collected here during upload. Files that are already in the SourceRepository are taken from there (Checked by e.g. comparing MD5sums).

- Versioning. File-revisions are assigned before check in. The system uses small integers (strictly monotonic). We don't rely on tar-ball version numbers. This means that we actually count check-ins, not file changes. A file may be unchanged, although available under several version numbers.

- presents meta data through the API. User interfaces must have access to sufficient meta-data, so that they can present e.g. projects and Base OSes in a reasonable way. Sorted by activity, by relevance, ...
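A minimal in-memory sketch of the staging and versioning ideas follows. It assumes that files are identified by their MD5 sum, that every check-in gets the next integer revision, and that a per-file history lists only the revisions in which the content actually changed. The class `SourceRepo` and its method names are hypothetical.

```python
import hashlib
from typing import Dict, List, Optional


class SourceRepo:
    """Toy source repository: counts check-ins and stores each distinct file once."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}          # MD5 sum -> file content
        self.revisions: List[Dict[str, str]] = []  # entry i is revision i+1: file name -> MD5 sum

    def needs_upload(self, md5sum: str) -> bool:
        """Staging check: a file whose MD5 sum is already known need not be uploaded."""
        return md5sum not in self.blobs

    def check_in(self, files: Dict[str, bytes]) -> int:
        """Store one check-in and return its revision number (1, 2, 3, ...)."""
        snapshot: Dict[str, str] = {}
        for name, data in files.items():
            digest = hashlib.md5(data).hexdigest()
            self.blobs.setdefault(digest, data)  # unchanged content is stored only once
            snapshot[name] = digest
        self.revisions.append(snapshot)
        return len(self.revisions)

    def history(self, name: str) -> List[int]:
        """Revisions in which the named file was added or its content changed."""
        changed: List[int] = []
        last: Optional[str] = None
        for rev, snapshot in enumerate(self.revisions, start=1):
            digest = snapshot.get(name)
            if digest is not None and digest != last:
                changed.append(rev)
            last = digest
        return changed
```

Checking in the same tar-ball twice with a modified spec-file yields two revisions, but the tar-ball's history contains only the first one.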

Package.xml + project.xml

A project's or package's metadata is encapsulated in an XML file that travels through the system along with the package or project. It contains:

- a list of files (package.xml) or a list of packages (project.xml),

- user permissions,

- require/conflict/provide lists,

- defines remote sources for tar-balls.

- enumerates platforms, where this package/project can be built (or a negative list, where it shall not build).

- all information for init-build-system.
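To make the list above more tangible, here is a sketch that assembles a possible package.xml with Python's standard `xml.etree.ElementTree` module. The meeting did not define a schema, so every element and attribute name used here (`package`, `file`, `requires`, `platform`, `name`) is an assumption, as is the helper `build_package_xml`.

```python
import xml.etree.ElementTree as ET
from typing import Iterable


def build_package_xml(name: str, files: Iterable[str],
                      requires: Iterable[str], platforms: Iterable[str]) -> str:
    """Serialize package metadata into a hypothetical package.xml layout."""
    root = ET.Element("package", {"name": name})
    for filename in files:
        ET.SubElement(root, "file", {"name": filename})      # list of files
    for dependency in requires:
        ET.SubElement(root, "requires").text = dependency    # require list
    for platform in platforms:
        ET.SubElement(root, "platform", {"name": platform})  # where the package can be built
    return ET.tostring(root, encoding="unicode")


example = build_package_xml(
    "k3b", ["k3b.spec", "k3b.tar.bz2"], ["kdelibs"], ["openSUSE/i586"]
)
```

User permissions, conflict/provide lists and remote tar-ball sources would be added the same way once their representation is decided.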

Interfaces

- openSUSE API

- SrcRep Interface

- RPM Repository Interface

- Notification Interface

The openSUSE API provides the functions of the other three interfaces as transparently as possible.

Source Repository Interface

- HTTP

- It presents simple objects (no SVN, no webdav).

- The following syntax was proposed for uploading:

PUT http://upload.os.org/sources/<project>/<package>/source files ...

PUT http://upload.os.org/sources/<project>/<package>/package.xml

PUT http://upload.os.org/sources/<project>/project.xml

Do we need different upload addresses (front-end machines) to support private projects?

- has an authentication-module (either in Frontend or in SrcRep) that is easily exchanged. We need to connect to iChains later.

- Versioning:

current version: URL

older version: URL?version=...

- has mapping between MD5sums and version numbers. Syntax for listing all version numbers when a file was actually changed: URL?lookup=history
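The URL scheme proposed in this list can be captured in a small helper. The sketch below only builds URLs and performs no requests. The host name is taken from the proposal above, while the function name `source_url` and its parameters are assumptions made for illustration.

```python
from typing import Dict, Optional
from urllib.parse import quote, urlencode

BASE = "http://upload.os.org/sources"  # host as written in the proposal


def source_url(project: str, package: Optional[str] = None,
               filename: Optional[str] = None, version: Optional[int] = None,
               history: bool = False) -> str:
    """Build a source repository URL for a project, package or single file."""
    parts = [BASE, quote(project, safe="")]
    if package:
        parts.append(quote(package, safe=""))
    if filename:
        parts.append(quote(filename, safe=""))
    url = "/".join(parts)
    query: Dict[str, object] = {}
    if version is not None:
        query["version"] = version    # older version: URL?version=...
    if history:
        query["lookup"] = "history"   # revisions where the file actually changed
    return url + ("?" + urlencode(query) if query else "")
```

A client would PUT to `source_url("kde5", "k3b", "package.xml")` for an upload and GET `source_url("kde5", "k3b", "k3b.spec", version=3)` to fetch an older revision.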

RPM Repository Interface

- HTTP

- GET http://download.os.org/rpms/<platform>/<project>/<package>/bin-files ...

- should be able to generate views. E.g. select project first, then platform.

Notification Interface (internal)

Status, logs, errors, events, etc. are also recorded in the RPM repository. They shall not be mirrored, and they are more dynamic. The Notification Interface supports simultaneous connection to multiple build frontends.

It delivers abstract events for:

- build status (restarted, errors, used packages [dependencies], ...) Supports callback URLs, HTTP POST.

- logs as streaming objects, while building, static later.
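The callback idea (abstract events delivered to registered URLs via HTTP POST) could look roughly like the following sketch. The JSON payload, the event type names and the `Notifier` class are assumptions. The transport function is injectable so that the dispatch logic can be tried without a network.

```python
import json
import urllib.request
from typing import Callable, Dict, List


def http_post(url: str, body: bytes) -> None:
    """Default transport: deliver the event body to a callback URL via HTTP POST."""
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=10):
        pass  # the response content is not needed


class Notifier:
    """Keeps callback URLs per event type and posts abstract events to them."""

    def __init__(self, send: Callable[[str, bytes], None] = http_post) -> None:
        self.send = send
        self.callbacks: Dict[str, List[str]] = {}

    def register(self, event_type: str, url: str) -> None:
        self.callbacks.setdefault(event_type, []).append(url)

    def emit(self, event_type: str, **details: object) -> int:
        """Send one event to every registered callback; return the number of deliveries."""
        body = json.dumps({"type": event_type, **details}).encode("utf-8")
        urls = self.callbacks.get(event_type, [])
        for url in urls:
            self.send(url, body)
        return len(urls)
```

A build frontend would register its callback URL once and then receive events such as a failed build together with the list of packages that were used. Streaming logs would need a different mechanism and are not covered here.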

Notification Interface (external)

The external notification interface is part of the openSUSE-API. Several additional notification services are available here that can be sent to a UI: ratings, users, transaction starts, source code changes...

Ideas for implementation:

- jabber protocol? IRC? RSS feeds? ...

- UI client opens a server port, and registers as a URL-callback.

- UI client registers a mail-in interface as a URL-callback.

- UI client connects to a notification channel (with keepalives, auto-reconnect, ...).

Source Repository

Discussed features:

- meta data of old versions should be stored. (may be needed when creating a STABLE distribution)

- Do we need locking? Not now. May be an option on the frontend, but always advisory locking only (compare CVS, SVN, ...)

- For consistency, we provide transactions for updating projects. This can be implemented by placing package version numbers in the project.xml file. Usually there are no such package version numbers in the project.xml file, so the latest available version of each package is taken.

- When storing a tar-ball, we prefer to store its remote URL and md5sum. But storing the tar-ball itself is also available.

- Is there a way to force a consistent snapshot? Probably not (and rarely needed).
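The transaction idea from the list above (optional package version numbers in project.xml, with the latest version taken otherwise) can be sketched as a simple resolution step. The function name and the use of `None` for "no version given" are assumptions for this illustration.

```python
from typing import Dict, Optional


def resolve_project(packages: Dict[str, Optional[int]],
                    latest: Dict[str, int]) -> Dict[str, int]:
    """Map each package of a project to the revision that should be built.

    packages: package name -> pinned revision, or None if project.xml gives none
    latest:   package name -> newest revision known to the source repository
    """
    resolved: Dict[str, int] = {}
    for name, pinned in packages.items():
        newest = latest[name]
        if pinned is None:
            resolved[name] = newest          # usual case: take the latest check-in
        elif 1 <= pinned <= newest:
            resolved[name] = pinned          # consistent state recorded in project.xml
        else:
            raise ValueError(f"{name}: revision {pinned} does not exist")
    return resolved
```

Writing all pinned revisions into project.xml in one step would then switch the whole project from one consistent state to the next.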

Build Hosts

Shall use a virtual XEN environment. This virtual server always starts from an empty image. It then loads the build system and all needed RPMs, as these packages are potentially insecure. Inside the XEN server the build script will run in a change-root-jail, so that logfiles can be guaranteed to exist after XEN exit.

Next Milestones

Responsibilities

Main contact for everything: adrian

The interfaces below the build frontend are a natural way to divide the work in two teams. The teamleads freitag & ro dispatch and define tasks among their team.

- Everything should be possible through the command line interface.

- A CLI is also needed for API-testing.

- All sources that are part of openSUSE are managed via the API, no internal tools are allowed.

- An interface like 'ci_new_pac' via openSUSE API is high priority.

First milestone

The early prototype shall support only one use-case, as defined by the following workflow:

- A user checks in packages into an 'add-on' project. This project exists on top of one Base Operating System. No layering of projects, no modification of the Base OS.

- The packages are built and the user gets notifications.

- Packages are saved in Source- and RPM-repositories and are offered for download.

We need

- a basic project management.

- packages that are like our current autobuild packages: a source tree and a spec-file that possibly defines subpackages. We restrict to one spec-file per package.

- Support for multiple architectures.

- the openSUSE API, well defined and implemented. We still allow internal interfaces to change.

- An authentication module running against a local user database. iChains support can be added later.

The first milestone will be presented at FOSDEM, at the end of February 2006.

Next Milestones

defined by use cases:

Use case 2 (“Contributions”):

- User applies patches to existing packages.

He creates an own project for testing and maintaining his patches.

He then makes a request to have the patches accepted upstream.

- In parallel and at lower priority, as resources permit: metrics and detailed notifications are implemented.

Use case xy:

- Not only different Architectures are supported, but also different base distributions.

Use case “openSUSE-at-home” (minimum implementation):

- no virtualisation of build hosts

- no scheduler (as the developer is also admin and knows his dependencies)

- just a build script like y2pm-build

Use case „private“:

- User (Customer, “Packman”, ...) gets a dedicated build server, possibly hosted by developer services, and offering free accounts.

Difficult to plan, but later use cases could contain these features:

- User can build packages also for non-Linux systems (BSD, Windows even?)

- We aim at the broadest possible user base and package base. “Have your package built at openSUSE, and you get binaries for SUSE, Debian, Fedora, Windows, Mac, ....”

- The end user sees one (mirrored) repository of all openSUSE packages (?)

- We link with well known developer repositories like sf.net: a button “submit to openSUSE” triggers rebuilds with the latest sources.

Published with first milestones

We see the following alternatives:

- only Web-UI+CLI,

- everything except the scheduler

- really everything

- only a few random components

- no source, only docs and specs, ...

If there are non-published parts, how shall we respond to contributors who would want to work on these non-published parts?

Possible reasons for keeping parts unpublished

- Novell may want to force us to stay closed source now and sell it as a commercial product later.

- Prevent that others install a copy.

We reached consensus that full open source is to be preferred.

Discussion of Packager

- Do we really need an OPM? („OpensusePackageManager“ format?) At this time: no.

- Do we support .deb packages? Would we provide a “Windows binary build service”? For stage 1: no. Other Linux based distributions can follow later. Windows: much later.

- At this time we don't allow upload of binary RPMs

Requirements:

- There are internal packages which are required for building a distribution but are never given outside (for example the package lame, which is involved in building openSUSE but not included for legal reasons). This must be fixed for openSUSE.

- QualityManagement

- Our automatic build checks should also be on the buildserver. Make check: Every check that can produce errors needs a (Wiki-)Homepage with a good explanation. So developers not only get a “package build failed” but also a reason why and perhaps a solution to avoid this error (that's what openSUSE stands for: High-Quality-Packages!). So we want a “standard set” of checks, but a developer should decide for each of his packages whether one (or all) of these checks should be done. So a good developer turns on every check, and packages from a bad developer pass only a few checks.

- What about unresolvable dependencies? These could be checked automatically if a complete dependency analysis is done by the platform-layering. Adrian wants an „easy mode“, which warns an uploader if a package could not be built because of internal conflicts.

- trust, voting, isn't decided yet => maybe we should have a look at the “sourceforge-trust concept”?

- bugtracking? yes. (see above)

- A "new package proposal commission" is not planned. Project admins do this when they merge or accept a submission.
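The automatic check for unresolvable dependencies mentioned in the requirements above could, in its simplest form, compare what a package requires with what the layers of its platform provide. The sketch below assumes that each layer is given as a plain set of provided names. Real RPM dependency resolution (versions, conflicts, file dependencies) is considerably more involved, and the function name is hypothetical.

```python
from typing import Iterable, List, Set


def unresolvable(requires: Iterable[str], layers: Iterable[Set[str]]) -> List[str]:
    """Return the required names that no layer of the platform provides."""
    provided: Set[str] = set().union(*layers)
    return sorted(set(requires) - provided)
```

An „easy mode“ could run such a check at upload time and warn the uploader before any build host is occupied.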

ToDo-List

- We need a good description of the command line client

- Define a detailed time-line, when to publish what.

- Create a team-site in openSUSE wiki

- Announce a roadmap (internal and external)?

- API definition for the build frontend and 'the buildserver - diagram' is approved by Adrian, everything else needs clarification with Eric. AI: adrian.

- What is the coherence between gpg-keys and trust levels?

- Define a rating system

- Where is the hardware? (AI: adrian) At this time we still have some computers in our server room. Minimal requirement: 4 computers: 1 for the web frontend, 1 for the rest (build-frontend, source- and RPM-repository), 2 as Build-Hosts

- What is the appearance of the web-GUI for „downloader“ and „packager“?

- clear the bugzilla- and wiki-integration also

- What are the download-path names for the repositories? (YaST2-Installation Source... => Repo-Interface-Client)

- Docu: at this time jw and lrupp are responsible for the internal docu and will act as contact persons

- Involving more developers: openSUSE has first priority! Everyone can work for openSUSE if required. Therefore: ask people if you need help. Talk to sp if necessary, to free the needed resources.

- All code is hosted on svn.suse.de as project (AI: adrian, mschaefer). The whole code will be hosted there, directory structures will change later, if needed.

- Internal documentation is available on w3d.suse.de (AI: jw; docu sources are stored in svn.suse.de). bugzilla.novell.com needs a new project “buildservices” (AI: freitag).

- The mailing-list is devel-ftl@suse.de ; IRC (if necessary): devel-ftl

- Everything regarding XML interfaces and the DTD: AIs: mls and cornelius

- GUIs, Webinterface AI: freitag

- First layout for Webfrontend AI: skh

- Feature whitepaper in December. „We provide a service thought“: Let's put our abstract imaginations on www.opensuse.org and ask the community to add their wishes. AI for whitepaper: mls; AI wiki: henne

- Technical user docu should be placed in wiki.

- Meeting: approximately every 2 weeks. 15-minute standup meetings whenever possible. AI: freitag

- Principal topic: decentralization

- detailing / architecture-review

- What about third-party involvement? (catchwords: IBM, Java, ...) Would there be a BuildService outside openSUSE => Requirement from third party: new product BuildServer?