Open-iSCSI and SUSE Linux

This is a short description of how to get the open-iscsi initiator to work on SUSE Linux. The software is fairly new, so not many findings have been documented yet.

This document describes how to build, install and modify Open-iSCSI for use with SUSE Linux. I don't claim this is "the solution", but it should at least get you started.

I do not offer any extended help or support. For that, I strongly recommend that you join the opensuse-factory mailing list and the open-iscsi mailing list.

Contents

- 1 Introduction

- 2 Prerequisites

- 3 Basic installation

- 4 Prepare your system

- 5 Build and install open-iscsi

- 6 Start up and check functionality

- 7 Configure the node

- 8 Checking session data

- 9 Disconnecting target(s)

- 10 Automatic start and volume mounting

- 11 Automatic dismount and stop

- 12 Managing with YaST2

- 13 Connecting to iSCSI with YaST2

Introduction

I've been wanting to use iSCSI for some time, and the base package of open-iscsi that was included in SUSE Linux 10.1 looked interesting. However, it just did not work. So, I started to find out why, and after many hours of testing, debugging and swearing, it now seems stable enough to experiment with. Some issues are still to be solved, more on that later.

Copyright and License

Copyright (c) 2006 by Anders Norrbring / Norrbring Consulting

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and with no Back-Cover Texts.

Translations

If you know of any translations for this document, or you are interested in translating it, please email me at anders AT norrbring DOT se.

Acknowledgments

I've had a lot of help figuring things out. Here are a few of those who have contributed most to these findings:

- Hannes Reinecke (SUSE)

- Mike Christie (Open-iSCSI project)

- Ming Zhang (iSCSI Enterprise Target project)

Feedback

Find something wrong with this document? (Or perhaps something right?) I would love to hear from you. Please email me at anders AT norrbring DOT se.

Revision history

(If you edit/update this document, please make your entry here!)

| Revision | Date | Signature | Changes |
|---|---|---|---|
| 1.4 | 2006-06-25 | S.M. | Added minor note for kernel 2.6.16.13-4-smp build error. |
| 1.3 | 2006-05-30 | A.N. | Started addition of chapter 12. |
| 1.2 | 2006-05-30 | A.N. | Edited TOC and fixed some smaller errors. |
| 1.1 | 2006-05-28 | A.N. | Updated to match open-iscsi svn r593. |
| 1.0 | 2006-05-23 | A.N. | First public release. |

Prerequisites

The base platform for this how-to is, of course, a working SUSE Linux installation. More precisely, you will need the following:

- Installed SUSE Linux 10.1 system.

- Access to SUSE installation CDs/DVD or online installation source.

- Linux knowledge beyond the beginner level.

- A functional and online iSCSI target.

- Knowledge of your systems.

Distribution News

Keep your system updated; you need at least kernel 2.6.16 on your SUSE system to run this successfully.

The initial document was based on open-iscsi svn revision 571. Beware that things change all the time; changes are recorded in the revision history at the top of this document as they are added.

Basic installation

Here I describe what you need to have installed, and how and when to install it.

SUSE Linux 10.1

This is, of course, the first thing to install. You can have anything from a minimal installation to a full-blown graphical environment; we'll go through all the steps anyway. Since quite a few of these steps involve handling kernel modules, I recommend that you either log in as root or su to root.

(Warning) You are root! Being logged in as root, or su-ing to root, makes you a potentially dangerous user! Please take your time to think before entering commands. You may accidentally erase your whole system, or make it inoperable and useless.

Additionally needed SUSE packages

The easiest way to install these additional packages is, of course, with SUSE's YaST2 package manager. If you prefer to install all packages and their dependencies manually, feel free to do so, but that is beyond the scope of this document.

(Important) We will not use the open-iscsi package provided by SUSE on your installation media (or online source). This is simply because if we install the provided RPM, online update will overwrite our version when SUSE releases a newer version on the update servers. A newer release may well be fully functional, but for now this is the easiest way to avoid a mess. Also be aware that the kernel distributed with SUSE Linux includes the kernel-space modules from open-iscsi, but unfortunately not all of them, and not up-to-date versions. This means that if you update your kernel, your compiled modules will be overwritten, rendering your iSCSI system unusable.

If you're using KDE or GNOME, start YaST2 from the menu and choose Software > Software Management to start the package chooser. If you're running in runlevel 3 and use the ncurses (text-based) version, issue the yast2 sw_single command to start the manager.

Install using the YaST2 utility

- Click the Filter drop-down box, and select Selections.

- Select the C/C++ Compiler and Tools selection.

- Change filter and either look manually, or use the search function.

- Select the kernel-source package.

- Select the db-devel package.

- Select the patch package.

- Accept and install the selected packages.

Download the open-iscsi software

We pull this directly from the open-iscsi project's svn repository. The packages available in rpm or tar.gz format are simply not recent enough to be useful.

- If you're using X, open a terminal window.

- Change working directory to your sources directory, usually /usr/src: cd /usr/src

- We use the svn command to download the source code from the repository. If you want to learn more about svn, use man svn. The open-iscsi repository is located on BerliOS' servers. To check out the files, issue the command: svn co svn://svn.berlios.de/open-iscsi/

You should now see something like this on your screen (only the first and last lines are shown):

labb:/usr/src # svn co svn://svn.berlios.de/open-iscsi/
A open-iscsi/test
A open-iscsi/test/regression.dat
A open-iscsi/test/regression.sh
A open-iscsi/test/README
(...)
A open-iscsi/etc/initd/initd.redhat
A open-iscsi/Makefile
A open-iscsi/README
Checked out revision 593.
labb:/usr/src #

If this does not happen, check that you have svn installed; it should have come with the C/C++ Compiler and Tools selection you installed in the previous step. Also check for changes to the project repository at http://developer.berlios.de/projects/open-iscsi/.
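If you want to confirm that the tools are in place before checking out, a small shell function can do it. This is a minimal sketch: check_build_tools is a hypothetical name, not something shipped with SUSE or open-iscsi, and the tool list in the comment is simply the set this guide relies on.

```shell
# Hypothetical helper: report which of the given commands are missing.
# Returns 0 if every command is found on $PATH, 1 otherwise.
check_build_tools() {
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example (the tools used in this guide):
#   check_build_tools svn make gcc patch
```

Once the packages above are installed, check_build_tools svn make gcc patch should print nothing and return 0; each missing command is reported on stderr.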

Prepare your system

To be able to compile the open-iscsi modules, we need to prepare the kernel source.

- Change working directory to your kernel sources directory, usually /usr/src/linux: cd /usr/src/linux

- When building external modules, the kernel tree is expected to be prepared. This includes the presence of certain binaries, the kernel configuration and the include/asm symlink. We will clone the current kernel configuration and prepare the tree for compiling external modules. To do this, issue the command: make cloneconfig && make modules_prepare

- You should now see a long stream of output scroll past, like this:

labb:/usr/src/linux # make cloneconfig && make modules_prepare
Cloning configuration file /proc/config.gz
Linux Kernel Configuration
Code maturity level options
Prompt for development and/or incomplete code/drivers (EXPERIMENTAL) [Y/n/?] y
General setup
(...)
HOSTLD scripts/mod/modpost
HOSTCC scripts/kallsyms
HOSTCC scripts/conmakehash
HOSTCC scripts/bin2c
labb:/usr/src/linux #

Your system is now prepared for compilation of the open-iscsi package!
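To double-check the preparation before building, you could test for two of the items mentioned above: the kernel configuration and the include/asm symlink. This is a sketch only; kernel_tree_prepared is a hypothetical helper, and it does not check for the host binaries (such as scripts/mod/modpost) that modules_prepare also builds.

```shell
# Hypothetical check: does the kernel tree look prepared for building
# external modules? Tests for the .config file and the include/asm symlink.
kernel_tree_prepared() {
    kdir=${1:-/usr/src/linux}
    if [ ! -f "$kdir/.config" ]; then
        echo "no .config in $kdir (run make cloneconfig)" >&2
        return 1
    fi
    if [ ! -L "$kdir/include/asm" ]; then
        echo "no include/asm symlink in $kdir (run make modules_prepare)" >&2
        return 1
    fi
    return 0
}
```

Run it without arguments to check /usr/src/linux, or pass another kernel source directory.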

Build and install open-iscsi

Building the open-iscsi modules and applications is pretty straightforward, but I've noticed that a small change to the init script is needed on several systems, while others can do without it.

Prepare the open-iscsi source code

This step is only needed if you use a version earlier than svn r593.

- Open up your favorite text editor and load the file Makefile located in the base open-iscsi directory (/usr/src/open-iscsi)

- Locate the line exec_prefix = /usr and change it to look like this:

DESTDIR ?=
prefix = /usr
exec_prefix = /
sbindir = $(exec_prefix)/sbin
bindir = $(exec_prefix)/bin
mandir = $(prefix)/share/man

Save the file and exit your editor.

(Note) The following step is optional; it can also be done after installation.

You can choose to make the change after installation with your favorite text editor, or you can use the small patch I've prepared.

- The patch way. It's simple; the patch looks like this:

--- etc/initd/initd.suse.old 2006-05-24 13:57:23.000000000 +0200
+++ etc/initd/initd.suse 2006-05-24 16:00:34.000000000 +0200
@@ -35,6 +35,7 @@
 iscsi_login_all_nodes()
 {
+ sleep 4
 TARGETS=$($ISCSIADM -m node 2> /dev/null | sed 's@\[\(.*\)\] .*@\1@g')
 for rec in $TARGETS; do

You can also download it from here (Right click and save as).

- Go to the open-iscsi source directory cd /usr/src/open-iscsi

- To apply the patch, enter patch --verbose -p0 < /path-to/initd.patch and watch it happen:

labb:/usr/src/open-iscsi # patch --verbose -p0 < initd.patch
Hmm... Looks like a unified diff to me...
The text leading up to this was:
--------------------------
|--- etc/initd/initd.suse.old 2006-05-24 13:57:23.000000000 +0200
|+++ etc/initd/initd.suse 2006-05-24 16:00:34.000000000 +0200
--------------------------
Patching file etc/initd/initd.suse using Plan A...
Hunk #1 succeeded at 35.
done

Compile open-iscsi

This step is really simple. Go to the open-iscsi source directory (cd /usr/src/open-iscsi), then issue the make command and watch it compile:

labb:/usr/src/open-iscsi # make
make -C usr
make[1]: Entering directory `/usr/src/open-iscsi/usr'
cc -O2 -g -Wall -Wstrict-prototypes -I../include -DLinux -DNETLINK_ISCSI=8 -c -o util.o util.c
cc -O2 -g -Wall -Wstrict-prototypes -I../include -DLinux -DNETLINK_ISCSI=8 -c -o io.o io.c
(...)
make[1]: Leaving directory `/usr/src/open-iscsi/kernel'
Compilation complete                Output file
----------------------------------- ----------------
Built iSCSI Open Interface module:  kernel/scsi_transport_iscsi.ko
Built iSCSI library module:         kernel/libiscsi.ko
Built iSCSI over TCP kernel module: kernel/iscsi_tcp.ko
Built iSCSI daemon:                 usr/iscsid
Built management application:      usr/iscsiadm
Read README file for detailed information.
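The summary at the end of the build lists five output files. A helper like the one below can confirm they all exist before you run make install. It is a sketch: check_build_output is a hypothetical name, not part of open-iscsi, and the file list is copied from the summary above, so other svn revisions may produce different files.

```shell
# Hypothetical post-build check: confirm that make produced every file
# listed in its "Compilation complete" summary.
check_build_output() {
    src=${1:-/usr/src/open-iscsi}
    status=0
    for f in kernel/scsi_transport_iscsi.ko kernel/libiscsi.ko \
             kernel/iscsi_tcp.ko usr/iscsid usr/iscsiadm; do
        if [ ! -f "$src/$f" ]; then
            echo "not built: $src/$f" >&2
            status=1
        fi
    done
    return "$status"
}
```

Every missing file is reported, not just the first, so one run shows the whole picture.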

(Note, SUSE Linux 10.1) On kernel version 2.6.16.13-4-smp you may receive an "UNSUPPORTED KERNEL" message when running make. If so, edit /lib/modules/2.6.16.13-4-smp/build/Makefile and set SUBLEVEL to 16.

Install the open-iscsi modules and apps

This is almost as simple as the build itself.

- Issue the make install command.

labb:/usr/src/open-iscsi # make install
make -C kernel install_kernel
make[1]: Entering directory `/usr/src/open-iscsi/kernel'
(...)
install -m 755 etc/initd/initd.suse \
    /etc/init.d/open-iscsi
make[1]: Leaving directory `/usr/src/open-iscsi'

- Set up the kernel module dependencies by issuing the depmod -aq command; it takes a couple of seconds to complete.

Edit the start/stop script

(Note) Skip this step if you applied the patch above!

If you didn't apply the patch above, you should edit the open-iscsi start/stop script to introduce a small delay in service startup, otherwise the connections may not be restored at boot time.

To do this, start your favorite text editor and open the file /etc/init.d/open-iscsi.

Locate the following (it's just below the init section):

iscsi_discovery()
{
    $ISCSIADM -m discovery --type=$ISCSI_DISCOVERY --portal=$ISCSI_PORTAL > /dev/null
}

iscsi_login_all_nodes()
{
    TARGETS=$($ISCSIADM -m node 2> /dev/null | sed 's@\[\(.*\)\] .*@\1@g')
    for rec in $TARGETS; do
        STARTUP=`$ISCSIADM -m node -r $rec | grep "node.conn\[0\].startup" | cut -d' ' -f3`
        NODE=`$ISCSIADM -m node -r $rec | grep "node.name" | cut -d' ' -f3`
        if [ $STARTUP = "automatic" ] ; then
            echo -n "Logging into $NODE: "
            $ISCSIADM -m node -r $rec -l
            rc_status -v
        fi
    done
}

In the function iscsi_login_all_nodes(), add a sleep 4 line for the delay, like this:

iscsi_login_all_nodes()
{
    sleep 4
    TARGETS=$($ISCSIADM -m node 2> /dev/null | sed 's@\[\(.*\)\] .*@\1@g')

Save the file and exit your editor.
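To confirm that either the patch or your manual edit took effect, you could look for a sleep line inside iscsi_login_all_nodes(). The following is a sketch using awk; has_login_delay is a hypothetical name, and it assumes the function's closing brace sits at the start of a line, as in the listing above.

```shell
# Hypothetical check: return 0 if iscsi_login_all_nodes() in FILE
# (default /etc/init.d/open-iscsi) contains a "sleep N" line, 1 otherwise.
has_login_delay() {
    awk '
        /^iscsi_login_all_nodes/               { in_func = 1; next }
        in_func && substr($0, 1, 1) == "}"     { exit }
        in_func && /^[ \t]*sleep[ \t]+[0-9]+/  { found = 1; exit }
        END                                    { exit (found ? 0 : 1) }
    ' "${1:-/etc/init.d/open-iscsi}"
}
```

A sleep elsewhere in the script does not count; only lines between the function name and its closing brace are examined.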

Add the sysconfig entry

We also need to add a configuration file under /etc/sysconfig.

- Open your text editor, and create the file: /etc/sysconfig/open-iscsi

- In the file opened for editing, enter the following:

## Path: Network/iSCSI/Client
## Description: iSCSI Default Portal
## Type: string
## Default: ""
#
# The iSCSI Default Portal to use on startup. Use either the hostname
# or IP number of the machine providing the iSCSI Targets to use
#
ISCSI_PORTAL=""
## Type: list(sendtargets,isns,slp)
## Default: sendtargets
#
# The iSCSI discovery method to use. Currently only 'sendtargets' is
# implemented.
#
ISCSI_DISCOVERY="sendtargets"

Then save the file, and exit your editor. You can also download it from here (Right click and save as).
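To see what the start script will pick up from this file, you can source it in a subshell and print a single variable. This sketch assumes, as the variable names suggest, that the init script reads /etc/sysconfig/open-iscsi by sourcing it; sysconfig_value is a hypothetical helper.

```shell
# Hypothetical helper: print the value of one variable from a sysconfig-style
# file. The file is sourced inside a subshell, so its variables do not leak
# into the calling shell.
sysconfig_value() {
    # $1 = file, $2 = variable name (letters, digits and underscores only,
    # checked first because the name is passed to eval)
    case $2 in
        ''|*[!A-Za-z0-9_]*) echo "bad variable name: $2" >&2; return 2 ;;
    esac
    (
        . "$1" || exit 1
        eval "printf '%s\n' \"\${$2}\""
    )
}
```

For example, sysconfig_value /etc/sysconfig/open-iscsi ISCSI_PORTAL prints the default portal; an empty line means none is set.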

Add the initiatorname config file

The daemon needs a config file containing the initiator name; without it, the daemon cannot start.

- You need to create a name for your initiator, and the name has to follow certain standards. You can read about iSCSI naming in IETF RFC 3721. To make it a bit easier for you, I'll quote the essentials here.

1.1. Constructing iSCSI names using the iqn. format

The iSCSI naming scheme was constructed to give an organizational naming authority the flexibility to further subdivide the responsibility for name creation to subordinate naming authorities. The iSCSI qualified name format is defined in [RFC3720] and contains (in order):

- The string "iqn."

- A date code specifying the year and month in which the organization registered the domain or sub-domain name used as the naming authority string.

- The organizational naming authority string, which consists of a valid, reversed domain or subdomain name.

- Optionally, a ':', followed by a string of the assigning organization's choosing, which must make each assigned iSCSI name unique.

The following is an example of an iSCSI qualified name from an equipment vendor:

              Organizational         Subgroup Naming Authority
                  Naming             and/or string Defined by
     Type  Date    Auth              Org. or Local Naming Authority
    +--++-----+ +---------+ +--------------------------------+
    |  ||     | |         | |                                |
    iqn.2001-04.com.example:diskarrays-sn-a8675309

Where:

"iqn" specifies the use of the iSCSI qualified name as the authority.

"2001-04" is the year and month on which the naming authority acquired the domain name used in this iSCSI name. This is used to ensure that when domain names are sold or transferred to another organization, iSCSI names generated by these organizations will be unique.

"com.example" is a reversed DNS name, and defines the organizational naming authority. The owner of the DNS name "example.com" has the sole right of use of this name as this part of an iSCSI name, as well as the responsibility to keep the remainder of the iSCSI name unique. In this case, example.com happens to manufacture disk arrays.

"diskarrays" was picked arbitrarily by example.com to identify the disk arrays they manufacture. Another product that ACME makes might use a different name, and have its own namespace independent of the disk array group. The owner of "example.com" is responsible for keeping this structure unique.

"sn" was picked by the disk array group of ACME to show that what follows is a serial number. They could have just assumed that all iSCSI Names are based on serial numbers, but they thought that perhaps later products might be better identified by something else. Adding "sn" was a future-proof measure.

"a8675309" is the serial number of the disk array, uniquely identifying it from all other arrays.

Despite what you read above, the naming scheme gives you a bit of freedom. Let's break down the following, perfectly legal, initiator name: iqn.2000-05.net.anything:01.189fbf1f2ac1

iqn is fixed; it must always be there.

2000-05 is the year and month in which the domain name below became valid.

net.anything is the domain name in reverse order; the domain here is anything.net.

:01 starts the locally chosen part of the name; here it serves as the initiator number.

189fbf1f2ac1 is an arbitrary value that makes the name unique.

- Open your text editor, and create the new file: /etc/initiatorname.iscsi

- In the file opened for editing, enter the following, but use your own unique initiator name:

## DO NOT EDIT OR REMOVE THIS FILE!
## If you remove this file, the iSCSI daemon will not start.
## If you change the InitiatorName, existing access control lists
## may reject this initiator. The InitiatorName must be unique
## for each iSCSI initiator. Do NOT duplicate iSCSI InitiatorNames.
InitiatorName=iqn.2000-05.net.anything:01.189fbf1f2ac1

Then save the file, and exit your editor.
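If you prefer to script this step, the helpers below check a name against a simplified version of the iqn. format quoted above, generate a random 12-hex-digit suffix shaped like the one in the example, and write the file only if it does not already exist. All three function names are hypothetical, and the check is deliberately stricter than RFC 3720 (ASCII only).

```shell
# is_valid_iqn NAME: simplified, ASCII-only check of
#   iqn.YYYY-MM.<reversed domain>[:<unique string>]
# RFC 3720 also permits some non-ASCII characters; this sketch rejects them.
is_valid_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:[a-z0-9.:-]+)?$'
}

# random_suffix: 12 random lowercase hex digits (6 bytes from /dev/urandom),
# the same shape as 189fbf1f2ac1 in the example name.
random_suffix() {
    od -An -N6 -tx1 /dev/urandom | tr -d ' \n'
}

# write_initiatorname FILE NAME: validate NAME, then create FILE with the
# header shown above. Refuses to overwrite an existing file, because a
# changed name may be rejected by the targets' access control lists.
write_initiatorname() {
    if ! is_valid_iqn "$2"; then
        echo "not a valid iqn. name: $2" >&2
        return 1
    fi
    if [ -e "$1" ]; then
        echo "refusing to overwrite existing $1" >&2
        return 1
    fi
    {
        echo '## DO NOT EDIT OR REMOVE THIS FILE!'
        echo '## If you remove this file, the iSCSI daemon will not start.'
        echo '## If you change the InitiatorName, existing access control lists'
        echo '## may reject this initiator. The InitiatorName must be unique'
        echo '## for each iSCSI initiator. Do NOT duplicate iSCSI InitiatorNames.'
        echo "InitiatorName=$2"
    } > "$1"
}
```

Usage, with your own date and reversed domain in place of the example ones: write_initiatorname /etc/initiatorname.iscsi "iqn.2000-05.net.anything:01.$(random_suffix)"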

Create the database directory

open-iscsi needs its database to keep track of targets and sessions. The default location is /var/db/iscsi, which does not exist on a SUSE system, so we'll create it before attempting to use the applications.

Give the command: mkdir -p /var/db/iscsi

Finish the installation

To make starting and stopping the daemon more SUSE-like, we'll add a symlink to the start/stop script.

SUSE keeps these rc symlinks in /sbin, so issue the command: ln -s /etc/init.d/open-iscsi /sbin/rcopen-iscsi

Let's go configure and test things!

Start up and check functionality

Now we should be ready to test that everything went well.

Test loading the modules and daemon

The easiest way is simply to issue the command: rcopen-iscsi start

That should be followed by this screen output:

labb:~ # rcopen-iscsi start
Starting iSCSI initiator service: done
labb:~ #

To double check, give the command: rcopen-iscsi status

This should produce the following:

labb:~ # rcopen-iscsi status
Checking for iSCSI initiator service: running
labb:~ #

The daemon process writes its log entries to the syslog; you can check them simply with: cat /var/log/messages

In there you should see something like this if the load was successful:

May 24 21:07:29 labb kernel: scsi_transport_iscsi: no version for "struct_module" found: kernel tainted.
May 24 21:07:29 labb kernel: scsi_transport_iscsi: module not supported by Novell, setting U taint flag.
May 24 21:07:29 labb kernel: libiscsi: module not supported by Novell, setting U taint flag.
May 24 21:07:29 labb kernel: iscsi_tcp: module not supported by Novell, setting U taint flag.
May 24 21:07:29 labb kernel: iscsi: registered transport (tcp)
May 24 21:07:29 labb iscsid: iSCSI logger with pid=2893 started!
May 24 21:07:30 labb iscsid: iSCSI daemon with pid=2894 started!
May 24 21:07:30 labb iscsid: version 1.0-574
May 24 21:07:30 labb iscsid: iSCSI sync pid=2895 started
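Rather than reading the whole log by eye, you can grep for the two lines that matter most: the TCP transport registration and the daemon start. A sketch only; iscsid_started is hypothetical, and the message patterns are copied from the sample log above, so other open-iscsi revisions may word them differently.

```shell
# Hypothetical check: did the TCP transport register and did iscsid log its
# start-up line? Returns 0 only if both messages are found in LOGFILE.
iscsid_started() {
    log=${1:-/var/log/messages}
    grep -q 'iscsi: registered transport (tcp)' "$log" &&
        grep -q 'iscsid: iSCSI daemon with pid=[0-9]* started' "$log"
}
```

Because /var/log/messages also keeps entries from earlier starts, a match does not by itself prove that the most recent start succeeded.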

Test discovery and connectivity

The first thing you need to check at this stage is that your target is in fact up and running...

The tool used to discover, connect, edit and delete targets from the initiator is iscsiadm (see man iscsiadm).

- To check for the target (discovery) and add it to the initiator database, we of course need to know the target's IP address and iSCSI port (standard is TCP port 3260). Let's check for our target that is located at IP 192.168.111.11 by giving the command: iscsiadm -m discovery -t st -p 192.168.111.11

That should render an output similar to this:

labb:~ # iscsiadm -m discovery -t st -p 192.168.111.11
[a3f400] 192.168.111.11:3260,1 iqn.2006-05.net.anything:store0

That means we have found our target! In this case the target presents itself as iqn.2006-05.net.anything:store0, a format you'll recognize from the initiator name configuration above, and from configuring the target (if you did that).

- If you want to see that the target was actually saved to the initiator database, just issue the command: iscsiadm -m discovery

You should then see something like the below if the target was successfully added to the database:

labb:~ # iscsiadm -m discovery
[71be71] 192.168.111.11:3260 via sendtargets

Here the [71be71] part is the resource id used in other actions involving the discovery method. (See man iscsiadm)

- You can also check the initiator node database by issuing the command: iscsiadm -m node

You should then see something like the below if the target was successfully added to the database:

labb:~ # iscsiadm -m node
[a3f400] 192.168.111.11:3260,1 iqn.2006-05.net.anything:store0

Here the [a3f400] part is the resource id used in other actions involving the node method. (See man 8 iscsiadm) You also see the target's iqn name, discussed above.

- To see the node data stored in the database (the node parameters), use the same syntax as above and add the node record, using the command: iscsiadm -m node -r a3f400

All the related node data is then listed on screen; we'll learn how to edit it later:

labb:~ # iscsiadm -m node -r a3f400
node.name = iqn.2006-05.net.anything:store0
node.transport_name = tcp
node.tpgt = 1
node.active_conn = 1
node.startup = manual
node.session.initial_cmdsn = 0
node.session.auth.authmethod = None
node.session.auth.username = <empty>
node.session.auth.password = <empty>
node.session.auth.username_in = <empty>
node.session.auth.password_in = <empty>
node.session.timeo.replacement_timeout = 120
node.session.err_timeo.abort_timeout = 10
node.session.err_timeo.reset_timeout = 30
node.session.iscsi.InitialR2T = No
node.session.iscsi.ImmediateData = Yes
node.session.iscsi.FirstBurstLength = 262144
node.session.iscsi.MaxBurstLength = 16776192
node.session.iscsi.DefaultTime2Retain = 0
node.session.iscsi.DefaultTime2Wait = 0
node.session.iscsi.MaxConnections = 0
node.session.iscsi.MaxOutstandingR2T = 1
node.session.iscsi.ERL = 0
node.conn[0].address = 192.168.111.11
node.conn[0].port = 3260
node.conn[0].startup = manual
node.conn[0].tcp.window_size = 524288
node.conn[0].tcp.type_of_service = 0
node.conn[0].timeo.login_timeout = 15
node.conn[0].timeo.auth_timeout = 45
node.conn[0].timeo.active_timeout = 5
node.conn[0].timeo.idle_timeout = 60
node.conn[0].timeo.ping_timeout = 5
node.conn[0].timeo.noop_out_interval = 0
node.conn[0].timeo.noop_out_timeout = 0
node.conn[0].iscsi.MaxRecvDataSegmentLength = 65536
node.conn[0].iscsi.HeaderDigest = None
node.conn[0].iscsi.DataDigest = None
node.conn[0].iscsi.IFMarker = No
node.conn[0].iscsi.OFMarker = No

This all means that we can reach the target, it responds correctly, and it can be used with our system. This is a good sign.
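Since later steps need the record id and individual parameter values, it can help to extract them from iscsiadm's output rather than copy them by hand. The two helpers below parse the output formats shown above; both names are hypothetical. node_param deliberately matches the whole "key = value" prefix instead of splitting on spaces the way the init script's grep | cut pipeline does.

```shell
# record_id_for_target NAME: read `iscsiadm -m node` lines such as
#   [a3f400] 192.168.111.11:3260,1 iqn.2006-05.net.anything:store0
# on stdin and print the record id (without brackets) of the line whose
# last field is NAME.
record_id_for_target() {
    awk -v target="$1" '$NF == target { print substr($1, 2, length($1) - 2) }'
}

# node_param KEY: read `iscsiadm -m node -r ID` output ("key = value" per
# line) on stdin and print the value of KEY, spaces included.
node_param() {
    awk -v key="$1" 'index($0, key " = ") == 1 { print substr($0, length(key) + 4) }'
}
```

For example, rec=$(iscsiadm -m node | record_id_for_target iqn.2006-05.net.anything:store0) followed by iscsiadm -m node -r "$rec" | node_param 'node.conn[0].startup' should print manual for a freshly discovered node.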

Connect and establish a session

To establish a session with our target we need to log in to it. To make the process easier to follow, I'll use a target without login or authentication details. We'll look into editing such details later on.

The default setting when adding a new target to the discovery and node database is manual login, which simply means that we need to establish the session manually on the command line. If it were set to automatic (we'll do this later as well), the target session would be established as soon as the iscsid daemon starts.

In this example, we issue the command iscsiadm -m node -r a3f400 -l to log in to our discovered target. This command produces no output when it succeeds, so we need to check either the udev events or, much easier, the kernel ring buffer. Issue the command dmesg and you should see something like this:

scsi8 : iSCSI Initiator over TCP/IP, v1.0-574
Vendor: IET Model: VIRTUAL-DISK Rev: 0
Type: Direct-Access ANSI SCSI revision: 04
SCSI device sdb: 976830464 512-byte hdwr sectors (500137 MB)
sdb: Write Protect is off
sdb: Mode Sense: 77 00 00 08
SCSI device sdb: drive cache: write back
SCSI device sdb: 976830464 512-byte hdwr sectors (500137 MB)
sdb: Write Protect is off
sdb: Mode Sense: 77 00 00 08
SCSI device sdb: drive cache: write back
sdb: sdb1
sd 8:0:0:0: Attached scsi disk sdb
sd 8:0:0:0: Attached scsi generic sg1 type 0

This means that iSCSI has set up a session and connected the iSCSI target device, in this case a 500 GB volume with one partition. On this system the disk is named sdb and the partition is sdb1.
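If you connect several targets, the new disk name can be picked out of the kernel messages instead of read by eye. A minimal sketch, assuming the "Attached scsi disk" wording shown above; attached_disks is a hypothetical helper.

```shell
# Hypothetical helper: read kernel messages on stdin (for example from
# dmesg) and print the name of each attached SCSI disk, such as sdb.
attached_disks() {
    sed -n 's/.*Attached scsi disk \(sd[a-z]*\).*/\1/p'
}
```

For example, dmesg | attached_disks | tail -n 1 prints the most recently attached disk (sdb here). Lines about SCSI generic devices such as sg1 are ignored.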

Of course, you can verify this by entering fdisk -l /dev/sdb, which lists the existing partition(s) and exits.

(Warning) Partition editors are risky! Be aware that you can mess up the target really badly if you make mistakes in fdisk! You ARE online with the target, and you ARE using the fdisk partition editor! If you use fdisk interactively, just view the partition table and then exit. That is enough to verify that things are okay.

It's beyond the scope of this document to teach you how to set up, edit and handle partitions and file systems. You can do this either with manual tools or with YaST2; either way, your iSCSI target volume will appear as a normal SCSI disk in your system.

(Note) Although your iSCSI volume in general behaves just like a locally attached disk, there are quite a few differences. For example, it cannot be mounted from /etc/fstab in the normal way, because networking has to be up first. I'll address these issues later.

Configure the node

There are several parameters for each node that might be necessary to change to get a practically functional system. The most obvious ones are startup and authentication. Most targets in a corporate environment use authentication to identify which initiators may establish a session.

Many systems also want the iSCSI target to be available at all times, and also have a mounted file system without manual intervention.

In this section we'll take a look at these matters. The node configurations are stored in the open-iscsi database in Berkeley DB format, so they can't be edited directly. Therefore we'll use the iscsiadm command line tool.

We'll assume that we have the node resource id a3f400 from the testing above; of course, this will be different on your system.

Set the node to autostart

To have open-iscsi automatically connect and log in to the target node when the daemon starts, we need to mark the node session as automatically established.

(Note) Manual or automatic session establishment is set individually for each node. Setting one node session to automatic will not affect any other node in the database. You must configure each one individually!

- First we look at what the node currently has set (see the node parameters above):

node.conn[0].startup = manual

- To set it to automatic, we use the iscsiadm tool with the update operation (-o update). For this to work, we need to know the resource id (a3f400), the name of the parameter to edit (node.conn[0].startup), and the value to set (automatic).

- Looking in the man page, we quickly find out that we should issue the command:

iscsiadm -m node -r a3f400 -o update -n node.conn[0].startup -v automatic

A quick check with the command iscsiadm -m node -r a3f400 confirms that automatic startup was successfully set:

labb:~ # iscsiadm -m node -r a3f400
(...)
node.conn[0].address = 192.168.111.11
node.conn[0].port = 3260
node.conn[0].startup = automatic
(...)

- To verify the automatic function, issue the command rcopen-iscsi restart and watch the result:

labb:~ # rcopen-iscsi restart
Stopping iSCSI initiator service: done
Starting iSCSI initiator service: done
Logging into iqn.2006-05.net.anything:store0: done
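If you script node changes, a thin wrapper keeps the quoting right: left unquoted, node.conn[0].startup contains a [0] bracket expression that the shell may try to expand as a filename pattern. This is a sketch only; node_update and set_node_startup are hypothetical, and ISCSIADM is an assumed override variable borrowed from the init script's naming.

```shell
# Override ISCSIADM to point at a different binary if needed.
ISCSIADM=${ISCSIADM:-iscsiadm}

# node_update RECORD NAME VALUE: change one parameter of a node record.
node_update() {
    "$ISCSIADM" -m node -r "$1" -o update -n "$2" -v "$3"
}

# set_node_startup RECORD automatic|manual
set_node_startup() {
    case $2 in
        automatic|manual)
            # Quoted, so the shell never treats [0] as a glob pattern.
            node_update "$1" 'node.conn[0].startup' "$2" ;;
        *)
            echo "startup must be automatic or manual, not: $2" >&2
            return 1 ;;
    esac
}
```

With this in place, set_node_startup a3f400 automatic runs the same command as the one shown above.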

(Note) Because we added the sleep 4 statement to the /etc/init.d/open-iscsi script, you'll notice a slight delay between the "Starting" and the "Logging into" lines. Most systems I've tested this on did not log in automatically at boot time without the delay.

Set node login details

If your target uses authentication, you must of course set the initiator to log in with the correct authentication method, username and password. Currently supported authentication methods are "None" and "CHAP".

To make life a little more complicated, iSCSI has two authentications, in and out. Put simply, from the initiator's point of view, out authentication is the initiator authenticating to the target, and in authentication is the target authenticating to the initiator. The two usernames and passwords can be completely different.

In this example I will show you only the out authentication; the in authentication works the same way.

(Tip) Most Cisco Systems targets use "CHAP" as default, whereas Hewlett-Packard targets use "None".

Here is the scenario. As shown earlier in the node parameters, nothing is set on this experimental node:

node.session.auth.authmethod = None
node.session.auth.username = <empty>
node.session.auth.password = <empty>
node.session.auth.username_in = <empty>
node.session.auth.password_in = <empty>

The target is now edited to require authentication from the initiator, the out authentication from this point of view. We want to use a username of "john" and the password "123pass". We also want to use the authentication method "CHAP".

Again, we use the iscsiadm tool to change the parameters in the database, one parameter at a time; there is no support for changing several at once. The process should look like this:

labb:~ # iscsiadm -m node -r a3f400 -o update -n node.session.auth.username -v john
labb:~ # iscsiadm -m node -r a3f400 -o update -n node.session.auth.password -v 123pass
labb:~ # iscsiadm -m node -r a3f400 -o update -n node.session.auth.authmethod -v CHAP
labb:~ # iscsiadm -m node -r a3f400
node.name = iqn.2006-05.net.anything:store0
node.transport_name = tcp
node.tpgt = 1
node.active_conn = 1
node.startup = manual
node.session.initial_cmdsn = 0
node.session.auth.authmethod = CHAP
node.session.auth.username = john
node.session.auth.password = ********
node.session.auth.username_in = <empty>
node.session.auth.password_in = <empty>

As you can see, the parameters have changed, and for security the password is obscured with asterisks.
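The three updates above can be wrapped in one function so the parameters are always set together. A sketch under the same assumptions as before: set_chap is hypothetical, ISCSIADM is an assumed override variable, it covers only out (one-way) authentication, and it stops at the first failing update.

```shell
ISCSIADM=${ISCSIADM:-iscsiadm}

# set_chap RECORD USERNAME PASSWORD: configure out (initiator-to-target)
# CHAP, one parameter per iscsiadm call, in the same order as above.
set_chap() {
    for pair in "node.session.auth.username=$2" \
                "node.session.auth.password=$3" \
                "node.session.auth.authmethod=CHAP"; do
        # ${pair%%=*} is the parameter name, ${pair#*=} the value
        # (the value itself may contain "=").
        "$ISCSIADM" -m node -r "$1" -o update \
            -n "${pair%%=*}" -v "${pair#*=}" || return 1
    done
}
```

Keep in mind that a password passed on the command line may be visible to other users in the process list, and it ends up in your shell history.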

Since open-iscsi svn r591 you can use the -S option to show the password for the node: query the database as usual, but add "-S", like this: iscsiadm -m node -r a3f400 -S, which produces this instead:

(...)
node.session.auth.authmethod = CHAP
node.session.auth.username = john
node.session.auth.password = 123pass
node.session.auth.username_in = <empty>
(...)

If you enter any of these parameters incorrectly, so that they don't match the settings on the target, you will of course get login errors, like this:

labb:~ # iscsiadm -m node -r a3f400 -l
iscsiadm: initiator reported error (5 - encountered iSCSI login failure)

Editing other parameters

There are lots of other parameters that can be set, among them data transfer optimizations, time-outs etc. All parameters are set in the same fashion as described above, one parameter at a time.

Checking session data

You can see active sessions by issuing the command iscsiadm -m session, like this:

labb:~ # iscsiadm -m session
[08:e41c74] 192.168.111.11:3260,1 iqn.2006-05.net.anything:exp
[00:a3f400] 192.168.111.11:3260,1 iqn.2006-05.net.anything:store0

This tells us that there are two active sessions. In plain English that means we have connected to two targets (or volumes).
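To script against the session list (for example, to count sessions), you can strip out just the bracketed session ids. A minimal sketch; session_ids is a hypothetical helper based on the output format above.

```shell
# Hypothetical helper: read `iscsiadm -m session` output on stdin and
# print one session id per line, e.g. 00:a3f400.
session_ids() {
    sed -n 's/^\[\([^]]*\)].*/\1/p'
}
```

For example, iscsiadm -m session | session_ids | wc -l gives the number of active sessions.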

To see detailed statistics for a node, issue the command iscsiadm -m session -r 00:a3f400 --stats which in this case presents us with the following information:labb:~ # iscsiadm -m session -r 00:a3f400 --stats

[00:a3f400] 192.168.111.11:3260,1 iqn.2006-05.net.anything:store0
iSCSI SNMP:
txdata_octets: 2276
rxdata_octets: 118736
noptx_pdus: 0
scsicmd_pdus: 37
tmfcmd_pdus: 0
login_pdus: 0
text_pdus: 0
dataout_pdus: 0
logout_pdus: 0
snack_pdus: 0
noprx_pdus: 0
scsirsp_pdus: 37
tmfrsp_pdus: 0
textrsp_pdus: 0
datain_pdus: 42
logoutrsp_pdus: 0
r2t_pdus: 0
async_pdus: 0
rjt_pdus: 0
digest_err: 0
timeout_err: 0
iSCSI Extended:
tx_sendpage_failures: 0
rx_discontiguous_hdr: 0
eh_abort_cnt: 0
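To watch a single counter, or to check whether any error counters are non-zero, you can filter this output. A sketch assuming the "name: value" layout shown above; iscsi_stat and iscsi_errors are hypothetical helper names, not part of open-iscsi.

```shell
# Hypothetical helpers for "iscsiadm -m session -r <id> --stats" output,
# which lists one "name: value" counter per line.

# Print the value of the counter named $1 (e.g. rxdata_octets).
iscsi_stat() {
    awk -v key="$1:" '$1 == key { print $2 }'
}

# Print every *_err counter that is not zero; no output means no errors.
iscsi_errors() {
    awk '$1 ~ /_err:$/ && $2 != 0 { print $1, $2 }'
}
```

For the session above, iscsiadm -m session -r 00:a3f400 --stats | iscsi_errors would print nothing, since digest_err and timeout_err are both 0.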

Disconnecting target(s)

Disconnecting from a target is really simple; just use one of two methods.

One node at a time

Use the command iscsiadm -m node -r a3f400 -u and you're done. You must of course use the appropriate resource id instead of my example id.

By stopping the daemon

You can also stop the daemon; this logs out all nodes and closes all sessions. Just use rcopen-iscsi stop:

labb:~ # rcopen-iscsi stop
Logging out from iqn.2006-05.net.anything:exp: done
Logging out from iqn.2006-05.net.anything:store0: done
Stopping iSCSI initiator service: done

Automatic start and volume mounting

Starting the daemon automatically is straightforward, since the provided start/stop script is suited for SUSE Linux. Mounting a volume connected via iSCSI, on the other hand, is not.

In this section I'll talk you through it, but I strongly recommend that you read up on the functions used, to get an understanding of how this works.

Automatic start of the daemon

This is simple, since the provided script is already in place and functional. The script starts the daemon in runlevels 3 and 5; any other level would just be silly, since networking must be up to make use of iSCSI. You can enable automatic start in two ways:

- The chkconfig way:

- Activate auto start: chkconfig open-iscsi on

- Deactivate auto start: chkconfig open-iscsi off

- The insserv way:

- Activate auto start: insserv open-iscsi

- Deactivate auto start: insserv -r open-iscsi

This also makes the daemon stop when you switch to another runlevel, like reboot, halt or single-user mode; in short, all runlevels that don't have networking.

Automatic mounting

This is the tricky part. If you enter the volume as usual in your fstab, either manually or with the YaST2 disk module, the system will try to mount the volume at boot before networking is up. That will of course not work since iSCSI volumes are located on the network.

(Note) For this example we assume that the iSCSI target volume is already partitioned and has a file system on it. How to do that is beyond the scope of this document; it's basic system knowledge.

If you check the man pages for the mount command, you can find things that give us hope, like the _netdev option for fstab. However, that option has no effect at all in SUSE Linux, since the distribution no longer has a netfs script. Instead, SUSE steers users toward the hotplug functions controlled by the HAL system.

It doesn't stop there. Since the volume is mounted dynamically, its device name (like /dev/sda or /dev/sdc) may very well change if some other hotplug volume is attached in a different order than when you set this one up, so we must identify the volume another way.

The way we are going to use is to identify the volume's partition(s) by UUID (Universally Unique Identifier). The UUID is unique to the file system on the partition and stays the same for as long as that file system exists; only re-creating the file system gives it a new one.

Finding the partition's UUID can be a little tricky: you first have to find out which device name the attached volume currently has on the system, and, as mentioned just above, that name can change.

There are several ways to do this; I'll list a few of them. It's a two- or three-step operation:

Find out the volume's device name

First you need to look up the volume's device name on the system, here are a couple of ways to do it.

- You can do it by trial and error with your favourite partition editor. Obviously no good.

- You can fire up YaST2's disk utility (yast2 disk) and look for it. Not that bad...

(Warning) Partition editors are risky! Be aware that you can badly damage the target if you make mistakes in yast2 disk! You ARE online with the target, and you ARE using the YaST2 partition editor! Just view the partition table and then exit; that is enough to see the device name.

- You can check the messages open-iscsi wrote to your syslog, as shown before. Obviously messy with a huge syslog.

- You can list the contents of the /dev directory and look for it. Plain stupid...

- You can look up the device with hwinfo --scsi --short which in my case returns:

labb:~ # hwinfo --scsi --short
disk:
  /dev/sda             MegaRAID LD0 RAID1 8677R
  /dev/sdb             IET VIRTUAL-DISK

Not bad at all: from the output I can quickly identify the iSCSI disk by its vendor, in this case IET (iSCSI Enterprise Target), which is a free software target (project page at SourceForge.net) released under the GNU General Public License. Great! Now we know for sure that our volume is named /dev/sdb at this point in time.
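If you want to pick the device out of that listing in a script, something like this may do. It's a sketch that assumes the vendor is the first word after the device name, as in the IET line above; disk_by_vendor is a made-up name.

```shell
# Hypothetical helper: read "hwinfo --scsi --short" output on stdin and
# print the device node(s) whose vendor (first word after the node) is $1.
disk_by_vendor() {
    awk -v vendor="$1" '$1 ~ /^\/dev\// && $2 == vendor { print $1 }'
}
```

In this example, hwinfo --scsi --short | disk_by_vendor IET would print /dev/sdb. The result is only valid until the device names change.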

(Note) Dynamic volumes: Again, be aware of the nature of dynamic device naming. The name may change for a number of reasons.

Find out what UUID(s) the volume's partition(s) has

We now need to find out what partition(s) the volume has and, most important, the UUID of each partition on this volume that we want to mount. This can also be done in a couple of ways, most of which involve two steps:

Find the partition(s) device name(s)

First we need to know how many partitions the volume has, and also the partition device names. These names are needed to find out the partition UUID in step two.

- By looking for the open-iscsi messages in your syslog after it has established a session. Not good, you won't see file system details and a large syslog is messy to search.

- With your favourite partitioning program. The simplest and most useful output comes from parted: parted /dev/sdb print just lists the existing partitions on the volume.

(Warning) Partition editors are risky! Be aware that you can badly damage the target if you make mistakes in parted! You ARE online with the target, and you ARE using the parted partition editor! If you use parted interactively, just view the partition table and then exit. That is the only thing we're looking for now.

In this example we have only one partition on the connected target volume (sdb).

labb:~ # parted /dev/sdb print
Disk geometry for /dev/sdb: 0kB - 500GB
Disk label type: msdos
Number  Start   End     Size    Type     File system  Flags
1       1kB     499GB   499GB   primary  xfs          type=83

This output tells us that there is one partition on the volume, a primary partition (number one). This time it carries the device name /dev/sdb1.

(Note) Dynamic naming: Remember, the device name may very well change at the next open-iscsi startup!

We also get the information that this partition has been formatted with the XFS file system, which is a fact we need for the fstab entry. Quite good, we get useful info from this one.

- You can also use the hwinfo tool again, but with another parameter. One catch with using hwinfo to view partitions is that we cannot limit the listing to one volume; it lists all partitions on all available volumes. This can of course generate uncomfortable amounts of data. Anyway, if we choose this way, we issue the command hwinfo --partition and in this case get the following output (irrelevant info left out):

16: None 00.0: 11300 Partition
  [Created at block.360]
  Unique ID: 6Kfz.SE1wIdpsiiC
  Parent ID: R0Fb.GP6DFlNhr+8
  SysFS ID: /block/sdb/sdb1
  Hardware Class: partition
  Model: "Partition"
  Device File: /dev/sdb1
  Device Files: /dev/sdb1, /dev/disk/by-id/scsi-1494554000000000000000000010000005c1200000d000000-part1, /dev/disk/by-uuid/612a0ea6-b9fd-4dfb-b954-e39288c348f5, /dev/disk/by-id/edd-_null_-part1
  Config Status: cfg=new, avail=yes, need=no, active=unknown
  Attached to: #15 (Disk)

We locate the correct partition(s) by device file name(s), in this case the only partition, Device File: /dev/sdb1. A great "side effect" of this tool is that we get the UUID at the same time, and that is what we're looking for anyway. So, if you use this method, you can skip the section "Get the UUID of the partition(s) to use".
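The by-uuid link can also be pulled out of the hwinfo --partition listing automatically. A sketch, assuming each entry starts with a numbered line and that "Device File:" comes before "Device Files:", as in the output above; uuid_link_for_partition is a hypothetical name.

```shell
# Hypothetical helper: read "hwinfo --partition" output on stdin and print
# the /dev/disk/by-uuid/ link listed for the partition device file $1.
uuid_link_for_partition() {
    awk -v dev="$1" '
        /^[0-9]+:/ { current = "" }                 # a new hwinfo entry starts
        $1 == "Device" && $2 == "File:" { current = $3 }
        $1 == "Device" && $2 == "Files:" && current == dev {
            for (i = 3; i <= NF; i++) {
                entry = $i
                sub(/,$/, "", entry)                # drop the separating comma
                if (entry ~ /^\/dev\/disk\/by-uuid\//) print entry
            }
        }'
}
```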

Get the UUID of the partition(s) to use

Now that we have the current device name of the partition (/dev/sdb1 in this example), we can get the device's UUID rather simply. The process is described in your /usr/share/doc/packages/sysconfig/README.storage file if you want to read up on it.

Since hotplugged devices rarely have a persistent device node, you should prefer the persistent links to them. You get a list of all available links to a storage device node with the command udevinfo -q symlink -n <device node>, like this:

labb:~ # udevinfo -q symlink -n /dev/sdb1
disk/by-id/scsi-1494554000000000000000000010000005c1200000d000000-part1 disk/by-uuid/612a0ea6-b9fd-4dfb-b954-e39288c348f5 disk/by-id/edd-_null_-part1

From this output you see immediately that the /dev/sdb1 partition has the UUID 612a0ea6-b9fd-4dfb-b954-e39288c348f5.
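Since udevinfo prints the links on one line separated by spaces, extracting the UUID takes a single pipeline. A sketch; uuid_from_symlinks is a made-up name.

```shell
# Hypothetical helper: read "udevinfo -q symlink -n <device node>" output on
# stdin (links separated by spaces) and print the UUID part of the
# disk/by-uuid/ link.
uuid_from_symlinks() {
    tr ' ' '\n' | sed -n 's|^disk/by-uuid/||p'
}
```

Usage: uuid=$(udevinfo -q symlink -n /dev/sdb1 | uuid_from_symlinks)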

Figure out the partition's file system

Now we have almost everything we need for an /etc/fstab entry that makes automatic mounting of the partition work. The missing piece is the partition's file system, unless you already got it from parted as described in the parted example above. Otherwise, you have two very easy and useful ways to figure it out:

- By using guessfstype, which does just what it says: it makes a good guess at which file system is used. Use it like this:

labb:~ # guessfstype /dev/sdb1
/dev/sdb1 *appears* to be: xfs

This is probably the most self-explanatory way; it presents just what we want to see, namely that we have an XFS file system.

- You can also use fsck in listing (dry-run) mode, like this:

labb:~ # fsck -N /dev/sdb1
fsck 1.38 (30-Jun-2005)
[/sbin/fsck.xfs (1) -- /dev/sdb1] fsck.xfs -- /dev/sdb1

This variant is actually a "dry run" of fsck: it shows what it would execute if run in "live" mode. In this case it shows that it would run fsck.xfs, which tells us that the partition holds an XFS file system.
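Both outputs can be reduced to just the file system name. A sketch that assumes exactly the two output formats shown above; fstype_from_output is a hypothetical name, and other versions of these tools may print something different.

```shell
# Hypothetical helper: read guessfstype or "fsck -N" output on stdin and
# print the file system type (e.g. xfs).
fstype_from_output() {
    sed -n \
        -e 's/.*\*appears\* to be: *//p' \
        -e 's/^\[[^ ]*fsck\.\([A-Za-z0-9]*\) .*/\1/p'
    # 1st expression: "<dev> *appears* to be: xfs"  (guessfstype)
    # 2nd expression: "[/sbin/fsck.xfs (1) -- <dev>] ..."  (fsck -N)
}
```

Usage: fstype=$(guessfstype /dev/sdb1 | fstype_from_output)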

Make an fstab entry

Now we have everything we need to mount the volume automatically at boot via /etc/fstab. All that's left is to edit the fstab file and add our entry.

First of all, make a directory to use as the mount point, here we use /mnt/iscsi and make it with the command: mkdir /mnt/iscsi

Fire up your favourite text editor, open the /etc/fstab file and enter the new mount. In this example case, it can look like this:

devpts /dev/pts devpts mode=0620,gid=5 0 0
/dev/fd0 /media/floppy auto noauto,user,sync 0 0
# Mount the iSCSI volume automatically.
/dev/disk/by-uuid/612a0ea6-b9fd-4dfb-b954-e39288c348f5 /mnt/iscsi xfs hotplug,rw 0 2

Some explanations may be in order. You can read about the fields in the fstab man page (man 5 fstab), but here is a short refresher:

- The first field, (fs_spec), describes the block special device or remote filesystem to be mounted.

- The second field, (fs_file), describes the mount point for the filesystem.

- The third field, (fs_vfstype), describes the type of the filesystem.

- The fourth field, (fs_mntops), describes the mount options associated with the filesystem.

- The fifth field, (fs_freq), is used for these filesystems by the dump(8) command to determine which filesystems need to be dumped.

- The sixth field, (fs_passno), is used by the fsck(8) program to determine the order in which filesystem checks are done at reboot time.
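If you prefer to generate the entry instead of typing it, here's a sketch that prints a line in the same form as the example above (hotplug,rw, dump 0, fsck pass 2). fstab_line is a made-up name; check the result and back up /etc/fstab before appending to it.

```shell
# Hypothetical helper: print an fstab line for a partition identified by
# UUID, with the options used in the example above:
#   options hotplug,rw, dump frequency 0, fsck pass 2
fstab_line() {
    if [ $# -ne 3 ]; then
        echo "usage: fstab_line UUID MOUNTPOINT FSTYPE" >&2
        return 1
    fi
    printf '/dev/disk/by-uuid/%s %s %s hotplug,rw 0 2\n' "$1" "$2" "$3"
}
```

Usage: fstab_line 612a0ea6-b9fd-4dfb-b954-e39288c348f5 /mnt/iscsi xfs >> /etc/fstab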

We're done! Sit back and enjoy the magic of automatic mounting.

You may need to adjust the startup order of your services if something depends on iSCSI. If, let's say, you want an NFS export running on the volume, you must start nfsserver after open-iscsi. This can be solved in several ways; the most obvious is to add the dependency in /etc/init.d/nfsserver.
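As a sketch of that approach, assuming /etc/init.d/nfsserver carries the usual LSB "INIT INFO" comment header that insserv reads: append open-iscsi to its Required-Start and Required-Stop lines, then run insserv nfsserver so the boot order is recalculated. Only the open-iscsi additions matter here; the other entries are placeholders, so keep whatever your script already lists.

```shell
### BEGIN INIT INFO
# Provides:       nfsserver
# Required-Start: $network $remote_fs open-iscsi
# Required-Stop:  $network $remote_fs open-iscsi
### END INIT INFO
# Required-Start: nfsserver starts only after open-iscsi is up.
# Required-Stop:  nfsserver stops before open-iscsi goes down.
```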

Automatic dismount and stop

Automatic dismounting

This is still to be figured out. Since the volume is mounted dynamically, I haven't found any obvious way to dismount it if there are other services depending on it. If there are no dependencies, dismount will happen automatically when the daemon stops.

If you have good ideas, please contact me at anders AT norrbring DOT se.

Automatic stop of the daemon

If you have set the service daemon to start automatically, it will also be stopped automatically, in the reverse order of startup. If you want to stop it manually, first dismount any mounted volume(s), then issue the stop command: rcopen-iscsi stop.
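The manual stop can be wrapped in a small script. A sketch, assuming the /mnt/iscsi mount point from the fstab example; is_mounted and stop_iscsi are hypothetical names, and MOUNTS only exists so the check can be tested against a file other than /proc/mounts.

```shell
# Hypothetical helper: succeed if $1 is a mount point listed in /proc/mounts
# (or in the file named by MOUNTS). Mount points containing spaces are not
# handled, since /proc/mounts escapes them.
is_mounted() {
    awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' "${MOUNTS:-/proc/mounts}"
}

# Hypothetical helper: dismount the iSCSI volume first, then stop the daemon.
stop_iscsi() {
    mp=${1:-/mnt/iscsi}           # mount point used in the fstab example
    if is_mounted "$mp"; then
        umount "$mp" || return 1   # don't stop the daemon while the volume is busy
    fi
    rcopen-iscsi stop
}
```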

Managing with YaST2

SUSE Linux 10.1 and SUSE Linux Enterprise Server 10 include a management utility for the Open-iSCSI initiator. The module is named iscsi-client.

Installing the YaST2 module

To use this utility you must install it from your installation media first, so fire up your YaST2 software management module, either in X by clicking your way there, or from the command line by entering the command: yast2 sw_single

- In there, do a search for "yast2-iscsi-client", mark it and install.

(Important) Do NOT start the installed module yet! When YaST2 starts this iSCSI client management utility, it checks dependencies, and it will find that open-iscsi is not installed. Well, at least it thinks it's not installed. Keep reading.

- Exit the YaST2 system.

Fool YaST2

Because of the dependency check YaST2 performs when starting its modules, we need to make sure that YaST2 thinks the open-iscsi package is installed.

It's no big deal really, but it has to be done. If we don't do this, YaST2's open-iscsi module will never run. Well, it will, but it will prompt us every time that it must install the open-iscsi package, which we have already installed, only manually. If we let YaST2 install it, all the other work we've done is overwritten and spoiled.

- Look up the open-iscsi rpm file on your installation media (easy on a DVD), on SL10.1 it's located on CD1.

(Tip) Look in this path: If you use CD #1 or the DVD, the open-iscsi rpm package is located in /suse/your_arch/ and is named open-iscsi-0.5._ver_._arch_.rpm

- Now make a fake installation of the package. The "fake" is that we only update the rpm database so that it shows the package as installed, without actually installing the package's files. Issue the command: rpm -ivh --justdb /path/to_package/open-iscsi-0.5._ver_._arch_.rpm

Example installation from an FTP source:

labb:~ # rpm -ivh --justdb ftp://ftp.gwdg.de/pub/opensuse/distribution/SL-10.1/inst-source/suse/i586/open-iscsi-0.5.545-9.i586.rpm
Retrieving ftp://ftp.gwdg.de/pub/opensuse/distribution/SL-10.1/inst-source/suse/i586/open-iscsi-0.5.545-9.i586.rpm
Preparing...                ########################################### [100%]
labb:~ #

Now YaST2 believes it's installed, but that also means that an online update will overwrite our work.

Protect open-iscsi from YOU

We need to tell YOU (online update) that it should not touch our self-compiled open-iscsi. Now that it's in the database, we need to set it to "Taboo".

- Start up YaST2's software management again.

- Search for the package open-iscsi and highlight it.

- Depending on whether you run X or ncurses, it looks a bit different:

- In X, right-click the package and select "Taboo - Never install". Save and exit. Done.

- In ncurses, either press the ! key, or press <alt+t> and then <alt+y>. Save and exit. Done.

The package is now protected from the YOU updater, it won't be touched.

(Tip) This is not forever. Later, when the package is up to date, we'll remove this restriction.

Connecting to iSCSI with YaST2

To configure iSCSI connections, use YaST2->Network Services->iSCSI Initiator. In SUSE Linux Enterprise products, the module is installed by default. In SL 10.1, you should install yast2-iscsi-client.rpm first.

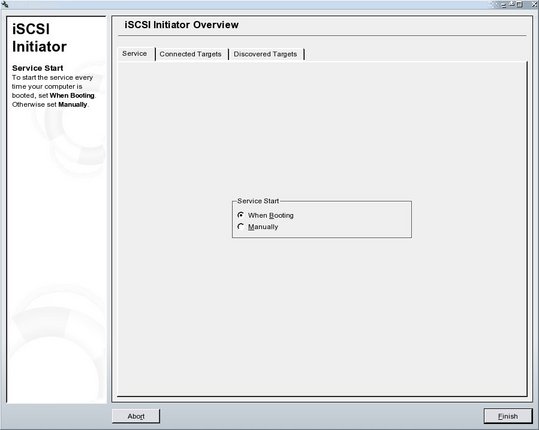

Starting and Stopping the Service

Set the init script status when booting in the first tab "Service". If you select "When Booting", the open-iscsi service is started when you close the YaST module and during start of the system. If you check "Manually", it is stopped when you close the YaST module if no targets are currently connected. Manually start and stop the open-iscsi service with rcopen-iscsi start and rcopen-iscsi stop.

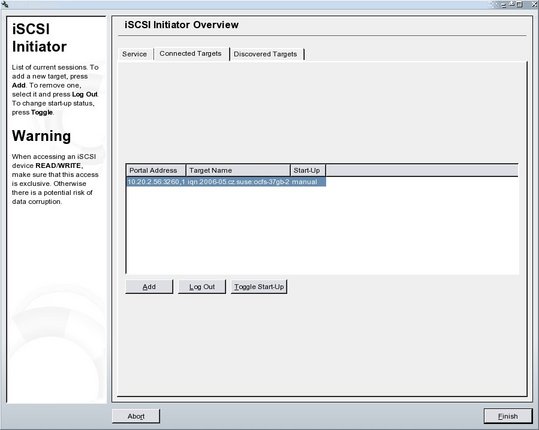

Connecting to Targets

Find all operations related to connecting to targets in the "Connected Targets" tab. Here you can see already connected targets, log out from the selected target, add a new target, and "Toggle Start-Up", which changes the start-up status of any target (when set to automatic, the target is connected automatically after the system boots).

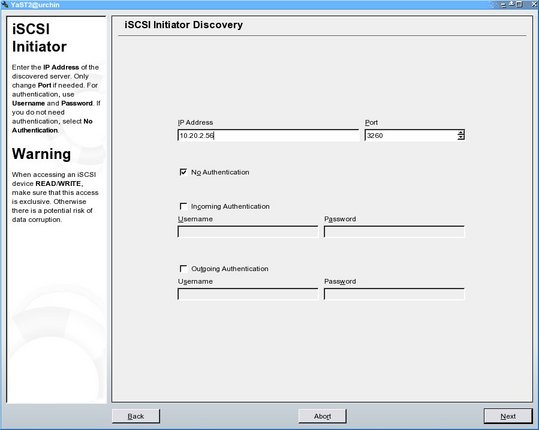

Click "Add". The "iSCSI Initiator Discovery" dialog, shown below, appears. Enter the IP address (do not use a DNS name). If authentication is required, uncheck "No Authentication", then select the authentication type to use and enter the username and password. This authentication is for the discovery process only.

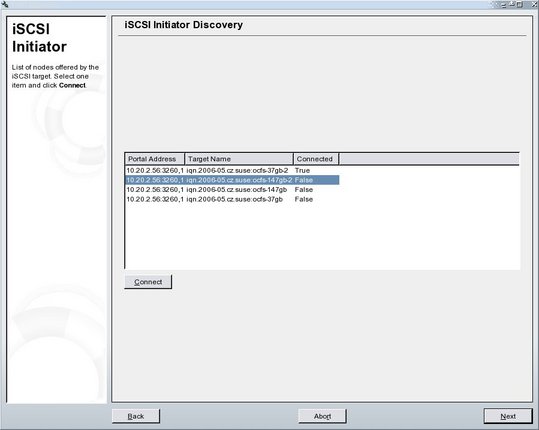

The discovered targets are then listed as shown below. Select the one to connect to, then click "Connect". An authentication dialog appears again; this authentication is for target login. After connecting, you are returned to the dialog with discovered targets, where the target is labeled as connected. Log in to another target, or press "Next" to return to the "Connected Targets" tab.

With "Toggle Start-Up", you can change the status to automatic. This means that the connection to the target is established automatically after reboot if the open-iscsi service is set to "When Booting" in the "Service" tab.